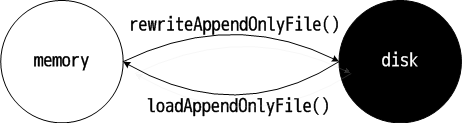

How AOF persistence works

Unlike Redis's RDB persistence, Redis AOF works in two modes: a background rewrite, and continuous backup while the server keeps serving requests.

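1) The background mode resembles RDB. Redis forks a child process; the main process keeps serving clients while the child performs the AOF rewrite and dumps the data to disk. The difference is in what happens during the rewrite. The main process is still serving, so it records every data change made in the meantime and stores it in server.aof_rewrite_buf_blocks. Once the child finishes, Redis appends this update buffer to the AOF file. RDB persistence has no counterpart to this step.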
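The background-rewrite protocol above can be sketched in plain POSIX C. This is a minimal illustration, not Redis code. `bg_rewrite()` and its parameters are hypothetical. The "snapshot" is just a string the child inherits through fork(), and the parent's concurrent changes are a fixed array copied into a local diff buffer that stands in for server.aof_rewrite_buf_blocks.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical sketch of the background-rewrite protocol (not Redis code).
 * The child writes the snapshot it inherited at fork() time to tmp_path.
 * Meanwhile the parent keeps "serving" and collects changes in diff[].
 * If the child exits successfully, the parent appends the diff and
 * renames tmp_path over final_path. Returns 0 on success, -1 on error. */
static int bg_rewrite(const char *tmp_path, const char *final_path,
                      const char *snapshot, const char *const *changes,
                      int nchanges) {
    char diff[4096];
    size_t difflen = 0;

    pid_t pid = fork();
    if (pid == -1) return -1;
    if (pid == 0) {
        /* Child: dump the point-in-time snapshot, report via exit code. */
        FILE *fp = fopen(tmp_path, "w");
        if (!fp) _exit(1);
        int ok = fputs(snapshot, fp) != EOF;
        ok = (fclose(fp) == 0) && ok;
        _exit(ok ? 0 : 1);
    }

    /* Parent: still serving; every change made now goes to the diff buffer. */
    for (int i = 0; i < nchanges; i++) {
        size_t n = strlen(changes[i]);
        assert(difflen + n <= sizeof(diff));
        memcpy(diff + difflen, changes[i], n);
        difflen += n;
    }

    /* Trap the child's exit code; only a clean exit is accepted. */
    int status;
    if (waitpid(pid, &status, 0) == -1) return -1;
    if (!WIFEXITED(status) || WEXITSTATUS(status) != 0) return -1;

    /* Append the accumulated diff, then atomically replace the old file. */
    FILE *fp = fopen(tmp_path, "a");
    if (!fp) return -1;
    size_t written = fwrite(diff, 1, difflen, fp);
    if (fclose(fp) != 0 || written != difflen) return -1;
    return rename(tmp_path, final_path) == 0 ? 0 : -1;
}
```

The child only ever sees the data as it was at fork() time. Anything the parent changes afterwards reaches the file solely through the appended diff, and the final rename() is what makes the switch to the new file atomic.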

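Now for the update buffer itself. When the Redis server changes data, for example on `set name Jhon`, it modifies the in-memory dataset. It also records the update operation, using the data format described above (the command format stored in the AOF file).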
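A recorded update is simply the command in Redis protocol format. This is the same format the rewrite code below uses for SET and SELECT. The following minimal sketch encodes `set name Jhon` this way. `encode_command()` is a hypothetical helper, and because it formats arguments with `%s`, arguments containing NUL bytes are not supported.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: encode argv as a Redis protocol multi-bulk command, e.g.
 * {"SET","name","Jhon"} -> "*3\r\n$3\r\nSET\r\n$4\r\nname\r\n$4\r\nJhon\r\n".
 * Returns the encoded length, or -1 if out (capacity cap) is too small. */
static int encode_command(char *out, size_t cap, int argc, const char **argv) {
    assert(argc > 0);
    /* Header: number of arguments */
    int n = snprintf(out, cap, "*%d\r\n", argc);
    if (n < 0 || (size_t)n >= cap) return -1;
    size_t len = (size_t)n;
    /* Each argument as a bulk string: $<length>\r\n<bytes>\r\n */
    for (int i = 0; i < argc; i++) {
        n = snprintf(out + len, cap - len, "$%zu\r\n%s\r\n",
                     strlen(argv[i]), argv[i]);
        if (n < 0 || (size_t)n >= cap - len) return -1;
        len += (size_t)n;
    }
    return (int)len;
}
```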

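The update buffer can live in server.aof_buf. Think of it as a small temporary staging area. Every accumulated update lands here first, and at specific moments the contents are written to the file or go into the linked list server.aof_rewrite_buf_blocks (detailed below). Data is added to server.aof_buf through propagate(), which is called wherever data is modified, so changes keep accumulating.

The update buffer can also live in server.aof_rewrite_buf_blocks, a linked list whose elements are of type struct aofrwblock. Think of it as a warehouse. While a background AOF child process is running, the accumulated updates are also appended to this list, and when the child finishes, the whole list is written to the file. So the two buffers are linked.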
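The "warehouse" list can be pictured as a chain of fixed-size blocks that are filled in order. A new block is allocated only when the tail is full. This is a sketch under assumptions. The `block`/`blocklist` types and the 8-byte `BLOCK_SIZE` are hypothetical and chosen to make block boundaries visible. Redis's own aofrwblock blocks are far larger.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical, tiny block size for illustration only. */
#define BLOCK_SIZE 8

typedef struct block {
    struct block *next;
    size_t used;            /* bytes filled in buf */
    char buf[BLOCK_SIZE];
} block;

typedef struct {
    block *head, *tail;
    size_t nblocks;
} blocklist;

/* Append len bytes: fill the tail block first, allocating new blocks as
 * needed. Returns 0 on success, -1 on allocation failure. */
static int blocklist_append(blocklist *bl, const char *s, size_t len) {
    while (len > 0) {
        block *b = bl->tail;
        if (b == NULL || b->used == BLOCK_SIZE) {
            b = calloc(1, sizeof(*b));
            if (!b) return -1;
            if (bl->tail) bl->tail->next = b; else bl->head = b;
            bl->tail = b;
            bl->nblocks++;
        }
        size_t room = BLOCK_SIZE - b->used;
        size_t n = len < room ? len : room;
        memcpy(b->buf + b->used, s, n);
        b->used += n;
        s += n;
        len -= n;
    }
    return 0;
}

/* Concatenate all blocks into out (capacity cap); returns bytes copied. */
static size_t blocklist_copy(const blocklist *bl, char *out, size_t cap) {
    size_t total = 0;
    for (const block *b = bl->head; b; b = b->next) {
        assert(total + b->used <= cap);
        memcpy(out + total, b->buf, b->used);
        total += b->used;
    }
    return total;
}

/* Free every block and reset the list. */
static void blocklist_free(blocklist *bl) {
    block *b = bl->head;
    while (b) { block *next = b->next; free(b); b = next; }
    bl->head = bl->tail = NULL;
    bl->nblocks = 0;
}
```

Appending never moves data that is already buffered. It only links a new block, so a large burst of writes during a rewrite avoids one huge reallocation and copy.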

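The point is to avoid issuing a write every time data changes. Writes are buffered in memory first and flushed to disk at a suitable moment, which avoids frequent small writes. Of course, every change could also be pushed to disk immediately. Choosing between the two is a trade-off between durability and performance.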

Here is the main code for the background mode:

```c
// Start a background child process to perform the AOF rewrite.
// Called from bgrewriteaofCommand(), startAppendOnly() and serverCron().

/* This is how rewriting of the append only file in background works:
 *
 * 1) The user calls BGREWRITEAOF
 * 2) Redis calls this function, that forks():
 *    2a) the child rewrites the append only file in a temp file.
 *    2b) the parent accumulates differences in server.aof_rewrite_buf.
 * 3) When the child finished '2a' exits.
 * 4) The parent will trap the exit code, if it's OK, will append the
 *    data accumulated into server.aof_rewrite_buf into the temp file, and
 *    finally will rename(2) the temp file in the actual file name.
 *    Then the new file is reopened as the new append only file. Profit!
 */
int rewriteAppendOnlyFileBackground(void) {
    pid_t childpid;
    long long start;

    // A child process is already performing a rewrite
    if (server.aof_child_pid != -1) return REDIS_ERR;
    start = ustime();
    if ((childpid = fork()) == 0) {
        char tmpfile[256];

        /* Child */
        // Close the listening sockets
        closeListeningSockets(0);
        // Set the process title
        redisSetProcTitle("redis-aof-rewrite");
        // Temporary file name
        snprintf(tmpfile,256,"temp-rewriteaof-bg-%d.aof", (int) getpid());
        // Perform the AOF rewrite
        if (rewriteAppendOnlyFile(tmpfile) == REDIS_OK) {
            // Private dirty memory: effectively the memory the child
            // consumed through copy-on-write
            size_t private_dirty = zmalloc_get_private_dirty();

            // Log it
            if (private_dirty) {
                redisLog(REDIS_NOTICE,
                    "AOF rewrite: %zu MB of memory used by copy-on-write",
                    private_dirty/(1024*1024));
            }
            exitFromChild(0);
        } else {
            exitFromChild(1);
        }
    } else {
        /* Parent */
        server.stat_fork_time = ustime()-start;
        if (childpid == -1) {
            redisLog(REDIS_WARNING,
                "Can't rewrite append only file in background: fork: %s",
                strerror(errno));
            return REDIS_ERR;
        }
        redisLog(REDIS_NOTICE,
            "Background append only file rewriting started by pid %d",childpid);
        // The rewrite has started, so clear the "rewrite scheduled" flag
        server.aof_rewrite_scheduled = 0;
        // Start time of the most recent rewrite
        server.aof_rewrite_time_start = time(NULL);
        // Child process ID
        server.aof_child_pid = childpid;
        updateDictResizePolicy();
        // The update buffer will be appended to the file, so force a SELECT
        // command to be emitted first; otherwise the merged changes could
        // end up in the wrong database.
        /* We set appendseldb to -1 in order to force the next call to the
         * feedAppendOnlyFile() to issue a SELECT command, so the differences
         * accumulated by the parent into server.aof_rewrite_buf will start
         * with a SELECT statement and it will be safe to merge. */
        server.aof_selected_db = -1;
        replicationScriptCacheFlush();
        return REDIS_OK;
    }
    return REDIS_OK; /* unreached */
}
```
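Setting server.aof_selected_db to -1 forces a SELECT because of how the "selected DB" bookkeeping works. Before a command for database `db` is appended, a SELECT is emitted whenever the remembered database differs from `db`. Since -1 never matches a real index, the next append always starts with a SELECT. `feed()` below is a hypothetical minimal version of that check. In Redis it lives in feedAppendOnlyFile().

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical sketch: append cmd for database db to buf (current length
 * len, capacity cap), emitting a SELECT first if *selected_db differs.
 * Setting *selected_db = -1 therefore forces a SELECT on the next call.
 * Returns the new buffer length; buf stays NUL-terminated. */
static size_t feed(char *buf, size_t len, size_t cap, int *selected_db,
                   int db, const char *cmd) {
    int n;
    if (*selected_db != db) {
        char dbstr[16];
        int dblen = snprintf(dbstr, sizeof(dbstr), "%d", db);
        n = snprintf(buf + len, cap - len,
                     "*2\r\n$6\r\nSELECT\r\n$%d\r\n%s\r\n", dblen, dbstr);
        assert(n > 0 && (size_t)n < cap - len);
        len += (size_t)n;
        *selected_db = db;   /* remember which DB the buffer now targets */
    }
    n = snprintf(buf + len, cap - len, "%s", cmd);
    assert(n >= 0 && (size_t)n < cap - len);
    return len + (size_t)n;
}
```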

As shown above, the child process performs the AOF rewrite while the parent records some bookkeeping about it. So how exactly is the rewrite done?

```c
// Main AOF rewrite function. Only called from rewriteAppendOnlyFileBackground().
/* Write a sequence of commands able to fully rebuild the dataset into
 * "filename". Used both by REWRITEAOF and BGREWRITEAOF.
 *
 * In order to minimize the number of commands needed in the rewritten
 * log Redis uses variadic commands when possible, such as RPUSH, SADD
 * and ZADD. However at max REDIS_AOF_REWRITE_ITEMS_PER_CMD items per time
 * are inserted using a single command. */
int rewriteAppendOnlyFile(char *filename) {
    dictIterator *di = NULL;
    dictEntry *de;
    rio aof;
    FILE *fp;
    char tmpfile[256];
    int j;
    long long now = mstime();

    /* Note that we have to use a different temp name here compared to the
     * one used by rewriteAppendOnlyFileBackground() function. */
    snprintf(tmpfile,256,"temp-rewriteaof-%d.aof", (int) getpid());

    // Open the temp file
    fp = fopen(tmpfile,"w");
    if (!fp) {
        redisLog(REDIS_WARNING, "Opening the temp file for AOF rewrite in "
            "rewriteAppendOnlyFile(): %s", strerror(errno));
        return REDIS_ERR;
    }

    // Initialize the rio stream on top of the file
    rioInitWithFile(&aof,fp);

    // If incremental fsync is enabled, fsync every REDIS_AOF_AUTOSYNC_BYTES written
    if (server.aof_rewrite_incremental_fsync)
        rioSetAutoSync(&aof,REDIS_AOF_AUTOSYNC_BYTES);

    // Dump every database
    for (j = 0; j < server.dbnum; j++) {
        char selectcmd[] = "*2\r\n$6\r\nSELECT\r\n";
        redisDb *db = server.db+j;
        dict *d = db->dict;
        if (dictSize(d) == 0) continue;

        // Get a safe iterator over the database
        di = dictGetSafeIterator(d);
        if (!di) {
            fclose(fp);
            return REDIS_ERR;
        }

        /* SELECT the new DB */
        // Write the SELECT command...
        if (rioWrite(&aof,selectcmd,sizeof(selectcmd)-1) == 0) goto werr;
        // ...followed by the database index
        if (rioWriteBulkLongLong(&aof,j) == 0) goto werr;

        /* Iterate this DB writing every entry */
        while((de = dictNext(di)) != NULL) {
            sds keystr;
            robj key, *o;
            long long expiretime;

            keystr = dictGetKey(de);
            o = dictGetVal(de);
            // Wrap keystr in a robj
            initStaticStringObject(key,keystr);

            // Get the key's expire time
            expiretime = getExpire(db,&key);

            /* If this key is already expired skip it */
            if (expiretime != -1 && expiretime < now) continue;

            /* Save the key and associated value */
            // Emit the write command(s) that recreate this key
            if (o->type == REDIS_STRING) {
                /* Emit a SET command */
                char cmd[]="*3\r\n$3\r\nSET\r\n";
                if (rioWrite(&aof,cmd,sizeof(cmd)-1) == 0) goto werr;
                /* Key and value */
                if (rioWriteBulkObject(&aof,&key) == 0) goto werr;
                if (rioWriteBulkObject(&aof,o) == 0) goto werr;
            } else if (o->type == REDIS_LIST) {
                if (rewriteListObject(&aof,&key,o) == 0) goto werr;
            } else if (o->type == REDIS_SET) {
                if (rewriteSetObject(&aof,&key,o) == 0) goto werr;
            } else if (o->type == REDIS_ZSET) {
                if (rewriteSortedSetObject(&aof,&key,o) == 0) goto werr;
            } else if (o->type == REDIS_HASH) {
                if (rewriteHashObject(&aof,&key,o) == 0) goto werr;
            } else {
                redisPanic("Unknown object type");
            }

            /* Save the expire time */
            if (expiretime != -1) {
                char cmd[]="*3\r\n$9\r\nPEXPIREAT\r\n";
                if (rioWrite(&aof,cmd,sizeof(cmd)-1) == 0) goto werr;
                if (rioWriteBulkObject(&aof,&key) == 0) goto werr;
                if (rioWriteBulkLongLong(&aof,expiretime) == 0) goto werr;
            }
        }
        // Release the iterator
        dictReleaseIterator(di);
    }

    /* Make sure data will not remain on the OS's output buffers */
    // Flush the stdio buffer and fsync the file to disk
    fflush(fp);
    aof_fsync(fileno(fp));
    fclose(fp);

    /* Use RENAME to make sure the DB file is changed atomically only
     * if the generate DB file is ok. */
    // Rename the temp file to the target name
    if (rename(tmpfile,filename) == -1) {
        redisLog(REDIS_WARNING,"Error moving temp append only file on the "
            "final destination: %s", strerror(errno));
        unlink(tmpfile);
        return REDIS_ERR;
    }
    redisLog(REDIS_NOTICE,"SYNC append only file rewrite performed");
    return REDIS_OK;

werr:
    // Cleanup
    fclose(fp);
    unlink(tmpfile);
    redisLog(REDIS_WARNING,"Write error writing append only file on disk: "
        "%s", strerror(errno));
    if (di) dictReleaseIterator(di);
    return REDIS_ERR;
}
```
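The comment at the top of rewriteAppendOnlyFile() says Redis packs collection elements into variadic commands, with at most REDIS_AOF_REWRITE_ITEMS_PER_CMD items per command. The sketch below shows that batching for a list key, assuming plain C strings as elements. `rewrite_list()` and its `items_per_cmd` parameter are hypothetical. They illustrate the idea behind rewriteListObject(), not its actual code.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical sketch: rewrite a list key as RPUSH commands carrying at
 * most items_per_cmd elements each, in Redis protocol format.
 * Returns bytes written to out, or -1 if out (capacity cap) is too small. */
static int rewrite_list(char *out, size_t cap, const char *key,
                        const char **items, int count, int items_per_cmd) {
    assert(items_per_cmd > 0);
    size_t len = 0;
    int n;
    for (int i = 0; i < count; i += items_per_cmd) {
        int batch = count - i < items_per_cmd ? count - i : items_per_cmd;
        /* Header: argument count is RPUSH + key + this batch of elements */
        n = snprintf(out + len, cap - len, "*%d\r\n$5\r\nRPUSH\r\n$%zu\r\n%s\r\n",
                     2 + batch, strlen(key), key);
        if (n < 0 || (size_t)n >= cap - len) return -1;
        len += (size_t)n;
        /* One bulk string per element in the batch */
        for (int j = i; j < i + batch; j++) {
            n = snprintf(out + len, cap - len, "$%zu\r\n%s\r\n",
                         strlen(items[j]), items[j]);
            if (n < 0 || (size_t)n >= cap - len) return -1;
            len += (size_t)n;
        }
    }
    return (int)len;
}
```

Capping the batch size keeps any single rewritten command bounded, while still replacing what might have been thousands of individual pushes with a few commands.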

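As mentioned earlier, once the AOF rewrite ends, the data changes produced during the rewrite are also appended to the AOF file. The periodic handler serverCron() shows this: after the child exits, the parent appends the changes made during the rewrite to the AOF file. That is the job of backgroundRewriteDoneHandler(), which appends server.aof_rewrite_buf_blocks to the AOF file.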

```c
// Runs after the background AOF rewrite child exits. Its main job is to
// append the update buffer server.aof_rewrite_buf_blocks to the rewritten AOF file.
/* A background append only file rewriting (BGREWRITEAOF) terminated its work.
 * Handle this. */
void backgroundRewriteDoneHandler(int exitcode, int bysignal) {
    ......
    // Write the AOF rewrite buffer server.aof_rewrite_buf_blocks to the new file
    if (aofRewriteBufferWrite(newfd) == -1) {
        redisLog(REDIS_WARNING,
            "Error trying to flush the parent diff to the rewritten AOF: %s",
            strerror(errno));
        close(newfd);
        goto cleanup;
    }
    ......
}

// Write the accumulated update buffer server.aof_rewrite_buf_blocks to fd
/* Write the buffer (possibly composed of multiple blocks) into the specified
 * fd. If a short write or any other error happens -1 is returned,
 * otherwise the number of bytes written is returned. */
ssize_t aofRewriteBufferWrite(int fd) {
    listNode *ln;
    listIter li;
    ssize_t count = 0;

    listRewind(server.aof_rewrite_buf_blocks,&li);
    while((ln = listNext(&li))) {
        aofrwblock *block = listNodeValue(ln);
        ssize_t nwritten;

        if (block->used) {
            nwritten = write(fd,block->buf,block->used);
            if (nwritten != block->used) {
                if (nwritten == 0) errno = EIO;
                return -1;
            }
            count += nwritten;
        }
    }
    return count;
}
```
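Taken together, the part of the done handler that matters here has three steps. The parent appends its diff to the child's temp file, makes the file durable, and atomically swaps it in. The following minimal sketch works under several assumptions. `finish_rewrite()` is hypothetical, and the diff is a single contiguous buffer rather than a block list. The real handler's elided steps, such as exit-code checks, reopening the AOF descriptor, and statistics, are left out.

```c
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical sketch: append diff to the child's temp file, fsync it,
 * then rename(2) it over the live AOF. Returns 0 on success, -1 on error. */
static int finish_rewrite(const char *tmp_path, const char *aof_path,
                          const char *diff, size_t difflen) {
    assert(diff != NULL || difflen == 0);
    int fd = open(tmp_path, O_WRONLY | O_APPEND);
    if (fd == -1) return -1;
    ssize_t n = write(fd, diff, difflen);
    if (n != (ssize_t)difflen) {
        if (n >= 0) errno = EIO;   /* treat a short write as an I/O error */
        close(fd);
        return -1;
    }
    /* Make the appended diff durable before the file becomes the live AOF */
    if (fsync(fd) == -1) { close(fd); return -1; }
    if (close(fd) == -1) return -1;
    /* rename(2) replaces aof_path atomically */
    return rename(tmp_path, aof_path) == 0 ? 0 : -1;
}
```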

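2) In the serve-while-backing-up mode, the Redis server stores every data change in server.aof_buf and, at specific moments, writes the buffer to the configured file (server.aof_filename). There are three such moments:

- before (re-)entering the event loop

- in the server's periodic routine serverCron()

- in stopAppendOnly(), when AOF is turned off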
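The buffer-then-flush pattern can be sketched minimally. Commands are appended to an in-memory buffer and written with a single write() at one of the flush points above. `aofbuf`, `aofbuf_append()` and `aofbuf_flush()` are hypothetical stand-ins for server.aof_buf and flushAppendOnlyFile(). This version differs from Redis in two ways: it returns -1 on a short write instead of exiting, and it always keeps the allocation rather than freeing large buffers.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical stand-in for server.aof_buf: a growable byte buffer. */
typedef struct {
    char *data;
    size_t len, cap;
} aofbuf;

/* Append n bytes, doubling the capacity as needed. 0 on success, -1 on OOM. */
static int aofbuf_append(aofbuf *b, const char *s, size_t n) {
    if (b->len + n > b->cap) {
        size_t newcap = b->cap ? b->cap : 64;
        while (newcap < b->len + n) newcap *= 2;
        char *p = realloc(b->data, newcap);
        if (!p) return -1;
        b->data = p;
        b->cap = newcap;
    }
    memcpy(b->data + b->len, s, n);
    b->len += n;
    return 0;
}

/* Called at a flush point: one write() for everything accumulated. */
static int aofbuf_flush(aofbuf *b, int fd) {
    assert(fd >= 0);
    if (b->len == 0) return 0;                 /* nothing to write */
    ssize_t n = write(fd, b->data, b->len);
    if (n != (ssize_t)b->len) return -1;       /* Redis logs and exits here */
    b->len = 0;                                /* keep the allocation for reuse */
    return 0;
}
```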

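All of this keeps a sudden crash from losing too much data. By default (`appendfsync everysec`), Redis writes the accumulated changes to the file at these points and fsyncs the file to disk roughly once per second. As a result, a crash can lose at most about the last couple of seconds of writes.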
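The three appendfsync policies differ only in when fsync() follows the write. The sketch below models the decision made at the end of flushAppendOnlyFile(), using hypothetical enum names and return codes. With `always`, Redis fsyncs inline. With `everysec`, it hands the fsync to a background thread at most once per second and skips the fsync if one is still running. With `no`, it leaves flushing to the OS.

```c
#include <assert.h>
#include <time.h>

/* Hypothetical names for the three appendfsync settings. */
enum fsync_policy { FSYNC_NO, FSYNC_ALWAYS, FSYNC_EVERYSEC };

/* Decide what follows a write() of the buffer:
 * 0 = leave it to the OS, 1 = fsync now in this thread,
 * 2 = hand fsync to a background thread.
 * EVERYSEC acts only when the clock has moved past *last_fsync, and
 * updates *last_fsync even if a background fsync is still running. */
static int fsync_action(enum fsync_policy p, time_t now, time_t *last_fsync,
                        int sync_in_progress) {
    assert(last_fsync != NULL);
    if (p == FSYNC_ALWAYS) {
        *last_fsync = now;
        return 1;
    }
    if (p == FSYNC_EVERYSEC && now > *last_fsync) {
        *last_fsync = now;
        return sync_in_progress ? 0 : 2;
    }
    return 0;
}
```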

Here is the main code for AOF persistence in the serve-while-backing-up mode:

```c
// Write all updates accumulated in server.aof_buf to the AOF file
/* Write the append only file buffer on disk.
 *
 * Since we are required to write the AOF before replying to the client,
 * and the only way the client socket can get a write is entering the
 * event loop, we accumulate all the AOF writes in a memory
 * buffer and write it on disk using this function just before entering
 * the event loop again.
 *
 * About the 'force' argument:
 *
 * When the fsync policy is set to 'everysec' we may delay the flush if there
 * is still an fsync() going on in the background thread, since for instance
 * on Linux write(2) will be blocked by the background fsync anyway.
 * When this happens we remember that there is some aof buffer to be
 * flushed ASAP, and will try to do that in the serverCron() function.
 *
 * However if force is set to 1 we'll write regardless of the background
 * fsync. */
void flushAppendOnlyFile(int force) {
    ssize_t nwritten;
    int sync_in_progress = 0;

    // Nothing buffered, nothing to write
    if (sdslen(server.aof_buf) == 0) return;

    // Is a background fsync job (run by the bio thread) still pending?
    if (server.aof_fsync == AOF_FSYNC_EVERYSEC)
        sync_in_progress = bioPendingJobsOfType(REDIS_BIO_AOF_FSYNC) != 0;

    // Unless force is set, the write may be postponed
    if (server.aof_fsync == AOF_FSYNC_EVERYSEC && !force) {
        /* With this append fsync policy we do background fsyncing.
         * If the fsync is still in progress we can try to delay
         * the write for a couple of seconds. */
        if (sync_in_progress) {
            if (server.aof_flush_postponed_start == 0) {
                /* No previous write postponing, remember that we are
                 * postponing the flush and return. */
                // Record when postponing started
                // (server.unixtime: Unix time sampled every cron cycle)
                server.aof_flush_postponed_start = server.unixtime;
                return;
            } else if (server.unixtime - server.aof_flush_postponed_start < 2) {
                // Postponed for less than 2 seconds: keep waiting
                /* We were already waiting for fsync to finish, but for less
                 * than two seconds this is still ok. Postpone again. */
                return;
            }
            // Otherwise, write now even though the fsync has not finished
            /* Otherwise fall through, and go write since we can't wait
             * over two seconds. */
            server.aof_delayed_fsync++;
            redisLog(REDIS_NOTICE,"Asynchronous AOF fsync is taking too long (disk"
                " is busy?). Writing the AOF buffer without waiting for fsync to "
                "complete, this may slow down Redis.");
        }
    }

    // Clear the postponed-flush marker
    /* If you are following this code path, then we are going to write so
     * reset the postponed flush sentinel to zero. */
    server.aof_flush_postponed_start = 0;

    /* We want to perform a single write. This should be guaranteed atomic
     * at least if the filesystem we are writing is a real physical one.
     * While this will save us against the server being killed I don't think
     * there is much to do about the whole server stopping for power problems
     * or alike */
    // The AOF file is already open: write everything in server.aof_buf to it
    nwritten = write(server.aof_fd,server.aof_buf,sdslen(server.aof_buf));
    if (nwritten != (signed)sdslen(server.aof_buf)) {
        /* Ooops, we are in troubles. The best thing to do for now is
         * aborting instead of giving the illusion that everything is
         * working as expected. */
        if (nwritten == -1) {
            redisLog(REDIS_WARNING,"Exiting on error writing to the append-only"
                " file: %s",strerror(errno));
        } else {
            redisLog(REDIS_WARNING,"Exiting on short write while writing to "
                "the append-only file: %s (nwritten=%ld, "
                "expected=%ld)",
                strerror(errno),
                (long)nwritten,
                (long)sdslen(server.aof_buf));
            if (ftruncate(server.aof_fd, server.aof_current_size) == -1) {
                redisLog(REDIS_WARNING, "Could not remove short write "
                    "from the append-only file. Redis may refuse "
                    "to load the AOF the next time it starts. "
                    "ftruncate: %s", strerror(errno));
            }
        }
        exit(1);
    }

    // Update the AOF file size
    server.aof_current_size += nwritten;

    // If server.aof_buf is small enough, reuse its allocation to avoid
    // frequent reallocations; if it has grown large, free it instead.
    // Redis is careful about memory use.
    /* Re-use AOF buffer when it is small enough. The maximum comes from the
     * arena size of 4k minus some overhead (but is otherwise arbitrary). */
    if ((sdslen(server.aof_buf)+sdsavail(server.aof_buf)) < 4000) {
        sdsclear(server.aof_buf);
    } else {
        sdsfree(server.aof_buf);
        server.aof_buf = sdsempty();
    }

    /* Don't fsync if no-appendfsync-on-rewrite is set to yes and there are
     * children doing I/O in the background. */
    if (server.aof_no_fsync_on_rewrite &&
        (server.aof_child_pid != -1 || server.rdb_child_pid != -1))
            return;

    // fsync to disk according to the appendfsync policy
    /* Perform the fsync if needed. */
    if (server.aof_fsync == AOF_FSYNC_ALWAYS) {
        /* aof_fsync is defined as fdatasync() for Linux in order to avoid
         * flushing metadata. */
        aof_fsync(server.aof_fd); /* Let's try to get this data on the disk */
        server.aof_last_fsync = server.unixtime;
    } else if ((server.aof_fsync == AOF_FSYNC_EVERYSEC &&
                server.unixtime > server.aof_last_fsync)) {
        if (!sync_in_progress) aof_background_fsync(server.aof_fd);
        server.aof_last_fsync = server.unixtime;
    }
}
```
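The postponement logic at the top of flushAppendOnlyFile() is easier to follow in isolation. The sketch below extracts it into a pure function. `should_write_now()` and its parameters are hypothetical. `*postponed_start` stands in for server.aof_flush_postponed_start, and `*delayed` stands in for server.aof_delayed_fsync. As in the original, 0 means "not postponing", which assumes the clock never reads 0.

```c
#include <assert.h>
#include <time.h>

/* Hypothetical sketch of the 'everysec' write-postponement rule.
 * Returns 1 if the buffer should be written now, 0 if the write is postponed.
 * *postponed_start: when postponing began (0 = not postponing).
 * *delayed: how many times we gave up waiting and wrote anyway. */
static int should_write_now(int everysec, int force, int sync_in_progress,
                            time_t now, time_t *postponed_start, long *delayed) {
    assert(postponed_start != NULL && delayed != NULL);
    if (everysec && !force && sync_in_progress) {
        if (*postponed_start == 0) {
            *postponed_start = now;   /* start postponing */
            return 0;
        } else if (now - *postponed_start < 2) {
            return 0;                 /* still within the 2-second grace period */
        }
        (*delayed)++;                 /* waited too long: write anyway */
    }
    *postponed_start = 0;             /* we are writing: reset the marker */
    return 1;
}
```

Giving up after two seconds bounds how much data can pile up unwritten. The trade-off is that the subsequent write() may block behind the slow background fsync.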